LINQ to Database Performance hints

Although I’ve been writing primarily about Rx here for a while, I still dig into good old LINQ to SQL / EF quite a bit. Along the way, I keep finding more tidbits to throw in the toolbox, and I thought I’d share some observations from a recent performance tuning project. (I’ll avoid disclosing the client and will change the database model to Northwind for this discussion to protect the guilty.)

In this project, I was brought in at the end, as things started going south, to try to get it across the finish line. As with many projects, the team waited until development was complete before they started to consider the performance impact of their code. This violates one of the principal tenets of performance testing:

RULE 1: Test early and often

Performance for larger scale apps needs to be considered from the beginning of the project and measured. If you don’t have an ongoing way of measuring the performance as the application progresses through the development lifecycle, you will find it difficult to pinpoint specific performance bottlenecks over time.

As an example, several years ago I met with core members of the Microsoft CLR team. They shared that they compare performance on each night’s build, and if they find a deviation of more than a couple of microseconds, the teams are required to identify the source of the degradation. When working with systems that have millions of lines of code, if they waited a week, it could become hard or even impossible to identify the multiple places where performance had dropped by 1/100th of a second. Over time these performance penalties build up.

I don’t mean to suggest that your Mom & Pop web site needs to live up to this stringent a testing standard. Nor am I suggesting that you prematurely over-optimize your code. But do try to make intelligent decisions, and have ways of determining up front when your decisions negatively impact your performance.
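As a minimal sketch of what "an ongoing way of measuring" could look like at its simplest, the helper below times a block of work with `System.Diagnostics.Stopwatch`. The names `TimingSketch` and `MeasureMilliseconds` are hypothetical, and the `Sleep` call is only a stand-in for the query or repository call you would actually measure.

```vbnet
Option Strict On
Option Infer On

Imports System
Imports System.Diagnostics

Module TimingSketch
    ' Hypothetical helper: runs the supplied work once and returns the
    ' elapsed wall-clock time in milliseconds.
    Function MeasureMilliseconds(work As Action) As Long
        Dim sw = Stopwatch.StartNew()
        work()
        sw.Stop()
        Return sw.ElapsedMilliseconds
    End Function

    Sub Main()
        Dim elapsed = MeasureMilliseconds(
            Sub()
                ' Placeholder for the database call under test.
                System.Threading.Thread.Sleep(50)
            End Sub)
        Console.WriteLine("Elapsed: {0} ms", elapsed)
    End Sub
End Module
```

Logging a number like this for a handful of key operations on every build is far from the CLR team's standard, but it gives you a baseline to compare against when something slows down.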

Why do I bring this up in the context of LINQ to DB (SQL/EF)? If you’re not testing and monitoring your app, you may find that a nice abstraction layer that you added just killed your performance. In the case of the client’s application, they had nicely abstracted away the ability to load various database structures into a generic repository. They had separate methods such as GetCustomerById, GetOrdersForCustomerId, and GetOrderDetailsForOrderId. They also had helper methods for validation, including ValidateIsCustomerNameUnique. The downside is that, when reading through the code, it wasn’t easy to notice where hidden database calls were sprinkled in. This brings up rule number 2 for performance testing with databases:

RULE 2: Profile your application

Profiling your application for external requests, including calls to services, is essential to make sure the requests you are making are not causing a performance bottleneck. I highly recommend using some sort of profiling tool, particularly for people who are new to LINQ. LINQ makes it easy to make silly mistakes like n+1 requests to a database when navigating lazy-loaded parent-child relationships.

There are plenty of options for profiling applications. I identified some of them back in my LINQ Tools post. Some of these require adding code to your assemblies, while others simply attach to running processes.

If you have a license for SQL Server, you probably have access to SQL Profiler. To use this tool, create a new trace, point it at your database, and run your application. Once you have executed the code you want to profile, you can view all of the requests made against that database. Pay particular attention to cases where the same SQL is issued multiple times in succession. The downside here is that you will need to manually trace through your code base to find where each request is made in order to fix the issues.

Another alternative, if you have the Ultimate SKU of Visual Studio, is to use the IntelliTrace feature added in Visual Studio 2010. Using IntelliTrace, you can not only identify which SQL requests were issued to the database, but also select each line in the results and navigate to the line of code that caused the request to be issued. Let’s consider this fairly innocuous code snippet to output the order date and product names associated with each order:

    1: Using model As New NwindEntities
    2:     For Each o In model.Orders.Take(3)
    3:         Console.WriteLine(o.OrderDate)
    4:         For Each od In o.Order_Details
    5:             Console.WriteLine(od.Product.ProductName)
    6:         Next
    7:     Next
    8: End Using

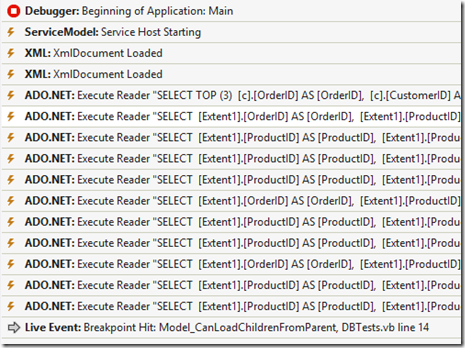

Running this with a breakpoint on line 8, we can see the following IntelliTrace output:

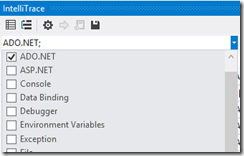

From here, we can see that as we iterate over each order, we are making a separate request to the database for each associated order detail record to get the related product object. Clicking on any line of the output takes you directly to the line of code where the request was made. Unfortunately, you won’t be able to view the parameters for each request using IntelliTrace as you could with SQL Profiler, but from a performance tuning perspective that’s less important than knowing that you are making excessive requests to the database. If the IntelliTrace output becomes cluttered with non-database trace items, I often find it helpful to filter the results to only ADO.NET requests:

Fortunately, fixing the code above only requires changing line 2 to eagerly load the child records:

For Each o In model.Orders.Include("Order_Details").Include("Order_Details.Product").Take(3)

We may want to further optimize this request by not hydrating the entire objects, but rather projecting just the values that we need:

    Using model As New NwindEntities
        Dim query = From o In model.Orders
                    Select o.OrderDate,
                        ProductNames = From od In o.Order_Details
                                       Select od.Product.ProductName

        For Each o In query.Take(3)
            Console.WriteLine(o.OrderDate)
            For Each p In o.ProductNames
                Console.WriteLine(p)
            Next
        Next
    End Using

If you don’t have access to SQL Profiler or IntelliTrace, consider one of the other relatively inexpensive profiling tools out there, like the MVC MiniProfiler, ORM Profiler, Huagati’s LINQ to SQL Profiler, or EF Prof. At a bare minimum, check the generated SQL for your queries using LINQPad.

With your application now profiled, hopefully you won’t run into issues like the one in the following code (which I did see in the customer’s app; the model is changed, again, to protect the guilty):

    Debug.WriteLine(Customers.FirstOrDefault().CompanyName)
    Debug.WriteLine(Customers.FirstOrDefault().ContactName)
    Debug.WriteLine(Customers.FirstOrDefault().ContactTitle)
    Debug.WriteLine(Customers.FirstOrDefault().Phone)
    Debug.WriteLine(Customers.FirstOrDefault().Fax)

    Dim CustomerOrders = Customers.FirstOrDefault().Orders
    For Each order In CustomerOrders
        ' Load the order into a POCO
    Next

Quick quiz, boys and girls: without using a profiler, how many requests are we making to our database here? Remember that not only will you have a database hit for each call to FirstOrDefault, which executes immediately rather than using deferred execution, but you’ll also get a call on GetEnumerator (called internally by the For Each iteration) that lazy loads the Orders. Thus the count is 7 for the above code (six FirstOrDefault calls plus one load of the Orders), right? Actually, it’s worse, because hidden behind the Debug.WriteLine is a trace writer which also writes the value to the database’s log table. As a result we actually have 12 database requests (7 reads and 5 writes) instead of the single query that we should have used. In this case we’re breaking rule #3:

RULE 3: Don’t fetch needlessly

In the above code, we can simply fetch our target customer once and automatically include their associated Orders as follows:

    Dim firstCustomer = Customers.Include("Orders").FirstOrDefault()
    Debug.WriteLine(firstCustomer.CompanyName)
    Debug.WriteLine(firstCustomer.ContactName)
    Debug.WriteLine(firstCustomer.ContactTitle)
    Debug.WriteLine(firstCustomer.Phone)
    Debug.WriteLine(firstCustomer.Fax)

    Dim CustomerOrders = firstCustomer.Orders
    For Each order In CustomerOrders
        ' Load the order into a POCO
    Next

In this case we fetch the firstCustomer once and reuse it rather than calling FirstOrDefault repeatedly. We also use the .Include option to eagerly fetch the child records. In this case, I’m saddened that the original developer didn’t use a bit of brain power to eliminate those extra database hits FOR LOGGING PURPOSES, but assume that it was because they weren’t aware of when their database was being hit. Of course, this brings us back to rule 2 – profiling.

Simply removing excessive database hits will almost always improve your code performance. In one case, I had a situation where a request was taking 10 minutes. After removing the excessive hits, the performance came down to 10 seconds, which was a definite improvement. However, 10 seconds still does not make the application web scale as the database’s CPU is pegged for that amount of time. Sometimes, it is actually best to break the process up a bit to improve performance.

RULE 4: Break up complex queries

To give you an idea of the kind of query that we’re talking about here, consider the following case where we are fetching the employees in the USA, including their regions and sales information.

    Dim empOrders = From e In Employees
                    Where e.Country = "USA"
                    Select e.FirstName, e.LastName,
                        Regions = From t In e.Territories
                                  Select t.Region.RegionDescription
                                  Distinct,
                        TopThreeSales = From o In e.Orders
                                        From od In o.OrderDetails
                                        Select od.Product.ProductName,
                                            TotalSale = od.Quantity * od.UnitPrice
                                        Order By TotalSale Descending

While this code will compile and run, the performance will start to suffer as larger volumes of data are added to the database. The reason is that both Territories and Orders are child collections from Employees. As a result, SQL will return the Cartesian product between the two sets. In other words, if a single employee is associated with 5 territories and has sold 10 products, the total number of rows returned would be 50. The OR/M is then responsible for splitting out those results again into the correct groupings. If you multiply this against 10,000 employees, you will find that there is a massive amount of excess data that is returned.
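The arithmetic can be checked with a small LINQ to Objects sketch. It does not touch a database; it only counts the rows a cross product of two child collections produces versus fetching each collection separately. The module and function names are hypothetical.

```vbnet
Option Strict On
Option Infer On

Imports System
Imports System.Linq

Module CartesianSketch
    ' Rows produced when two child collections of one parent are
    ' flattened into a single result set (every pairing appears).
    Function CombinedRowCount(territories As Integer, sales As Integer) As Integer
        Dim rows = From t In Enumerable.Range(1, territories)
                   From s In Enumerable.Range(1, sales)
                   Select t, s
        Return rows.Count()
    End Function

    ' Rows produced when each child collection is fetched on its own.
    Function SeparateRowCount(territories As Integer, sales As Integer) As Integer
        Return territories + sales
    End Function

    Sub Main()
        Console.WriteLine(CombinedRowCount(5, 10))  ' 50
        Console.WriteLine(SeparateRowCount(5, 10))  ' 15
    End Sub
End Module
```

Scaled to 10,000 employees with the same shape of data, that is 500,000 child rows for the single query against 150,000 for separate queries.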

In this case, it may be helpful to split your subqueries up into separate database requests. You could do something like the following, using the Contains clause to pass the IDs of the parent records in to the subsequent queries.

    Dim empOnly = (From e In Employees
                   Where e.Country = "USA"
                   Select e.EmployeeID, e.FirstName, e.LastName).ToList()

    Dim EmpIds = empOnly.Select(Function(e) e.EmployeeID)

    Dim TopThreeSales = From o In Orders
                        Where EmpIds.Contains(o.EmployeeID)
                        From od In o.OrderDetails
                        Select o.EmployeeID, od.Product.ProductName,
                            TotalSale = od.Quantity * od.UnitPrice
                        Order By TotalSale Descending

However, “Contains” has a couple of hidden surprises that limit our use here. First, if you use Contains, you cannot make the query into a compiled query because the number of parameters varies at run-time. Second, if you have more than 2100 items in your EmpIds collection, you will run into a hard limit in SQL Server, which only allows up to 2100 parameters. In this event, it is better to re-apply the original filter and return the new result sets for each of the subsequent queries. In the end we can join the separate queries back together again using LINQ to Objects:

    Dim empOnly = (From e In Employees
                   Where e.Country = "USA"
                   Select e.EmployeeID, e.FirstName, e.LastName).ToList()

    Dim Regions = (From e In Employees
                   Where e.Country = "USA"
                   From t In e.Territories
                   Select e.EmployeeID, t.Region.RegionDescription
                   Distinct).ToList()

    Dim TopThreeSales = (From e In Employees
                         Where e.Country = "USA"
                         From o In e.Orders
                         From od In o.OrderDetails
                         Select o.EmployeeID, od.Product.ProductName,
                             TotalSale = od.Quantity * od.UnitPrice
                         Order By TotalSale Descending).ToList()

    Dim combined = From e In empOnly
                   Select e.FirstName, e.LastName,
                       EmpRegions = Regions.Where(Function(reg) e.EmployeeID = reg.EmployeeID),
                       Sales = TopThreeSales.Where(Function(sale) e.EmployeeID = sale.EmployeeID)
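One thing to watch in the in-memory join: calling Where against the child list once per employee rescans that whole list every time. If the lists get large, a variation worth considering (this is my own sketch, not what the client project used) is to group the children once with ToLookup and then index into the lookup. The `Emp` and `Sale` classes and the sample data below are hypothetical stand-ins for the anonymous types in the queries above.

```vbnet
Option Strict On
Option Infer On

Imports System
Imports System.Collections.Generic
Imports System.Linq

Module LookupJoinSketch
    ' Hypothetical stand-ins for the projected employee and sale rows.
    Class Emp
        Public Property EmployeeID As Integer
        Public Property LastName As String
    End Class

    Class Sale
        Public Property EmployeeID As Integer
        Public Property ProductName As String
    End Class

    ' Groups the sales by employee in one pass, then attaches each
    ' group to its employee instead of rescanning the list per employee.
    Function CombineSales(emps As IEnumerable(Of Emp),
                          sales As IEnumerable(Of Sale)) As Dictionary(Of String, List(Of String))
        Dim salesByEmp = sales.ToLookup(Function(s) s.EmployeeID)
        Return emps.ToDictionary(
            Function(e) e.LastName,
            Function(e) salesByEmp(e.EmployeeID).Select(Function(s) s.ProductName).ToList())
    End Function

    Sub Main()
        Dim emps = {New Emp With {.EmployeeID = 1, .LastName = "Davolio"},
                    New Emp With {.EmployeeID = 2, .LastName = "Fuller"}}
        Dim sales = {New Sale With {.EmployeeID = 1, .ProductName = "Chai"},
                     New Sale With {.EmployeeID = 1, .ProductName = "Tofu"}}

        For Each pair In CombineSales(emps, sales)
            Console.WriteLine("{0}: {1}", pair.Key, String.Join(", ", pair.Value))
        Next
    End Sub
End Module
```

An employee with no sales simply gets an empty list, because a lookup returns an empty sequence for a missing key rather than throwing.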

In the above referenced project, I was able to take some queries that as a single LINQ statement took 10 seconds to run on the database down to sub-second requests. Your mileage may vary using this technique. If at all unsure, refer back to Rule 2: Profile.

RULE 5: Use ORM by default but Stored Proc where necessary

At times you will find that the generated query is too complex and the database has issues trying to parse the generated SQL. The complexity of your query, particularly the number of joins and the depth of the object graph/inheritance model you are traversing, can cause issues. In these cases, I have no objections to using stored procedures to wrangle the otherwise unruly queries. LINQ to DB is great for the 70-80% of CRUD operations, but there are times, particularly when reporting, when you need something else. Thankfully, LINQ to SQL and LINQ to EF both support consuming stored procedures when the need arises, without the need to write the tedious and potentially error-prone custom ADO code yourself.

In addition, LINQ is not a replacement for ETL operations. In one case, we had a situation where saving a single record with 2000 children caused the database to churn for 10 minutes due to 2001 individual hits to the database for the update process (on a single SaveChanges call). We re-wrote that operation using BulkCopy and brought the operation down to 1 second from 10 minutes.
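For reference, here is a rough sketch of what a bulk insert can look like, assuming the BulkCopy mentioned above is ADO.NET's `SqlBulkCopy`. The table name `dbo.OrderLines`, its columns, and the module and function names are all hypothetical; the real project's schema is not shown here. Only `BuildDetailTable` runs in `Main`, since `BulkInsert` needs a live SQL Server connection string.

```vbnet
Option Strict On
Option Infer On

Imports System
Imports System.Data
Imports System.Data.SqlClient

Module BulkCopySketch
    ' Builds the child rows in memory. Column names are hypothetical.
    Function BuildDetailTable(parentId As Integer, rowCount As Integer) As DataTable
        Dim table As New DataTable("OrderLines")
        table.Columns.Add("ParentId", GetType(Integer))
        table.Columns.Add("LineNumber", GetType(Integer))
        table.Columns.Add("Quantity", GetType(Short))
        For i = 1 To rowCount
            table.Rows.Add(parentId, i, CShort(1))
        Next
        Return table
    End Function

    ' Sends every row in one bulk operation instead of one INSERT per row.
    ' dbo.OrderLines is a hypothetical destination table.
    Sub BulkInsert(connectionString As String, table As DataTable)
        Using bulk As New SqlBulkCopy(connectionString)
            bulk.DestinationTableName = "dbo.OrderLines"
            For Each col As DataColumn In table.Columns
                bulk.ColumnMappings.Add(col.ColumnName, col.ColumnName)
            Next
            bulk.WriteToServer(table)
        End Using
    End Sub

    Sub Main()
        Dim table = BuildDetailTable(1, 2000)
        Console.WriteLine("Rows ready to copy: {0}", table.Rows.Count)
        ' BulkInsert("<your connection string>", table)
    End Sub
End Module
```

The trade-off is that a bulk copy bypasses the ORM's change tracking, so any entities you already have in memory will not know about the new rows.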

RULE 6: Use Appropriate Sample Data

The last item for today is to make sure that your development database holds quantities of data representative of what you will have after a couple of years of production data enters your system. We found a number of cases where complex queries like the ones I described in rule 4 above would perform fine in development, where we had thousands of rows of data, but when we ran them against the production data, which had millions of rows, the performance died. The complex joins which worked fine against smaller data sets no longer worked against the bigger sets. If we had had a good approximation of production data volumes in development, we would have been able to diagnose and fix this issue before shipping the version to production.

Those are just a few of my rules of thumb which have helped me diagnose and fix performance issues with LINQ to SQL/EF. If you have any tricks or techniques to add, please let me know what you Thinq.